6 Reasons to Track Skills Development

Whether we realize it or not, machine learning (ML) and artificial intelligence (AI) touch our lives every day. Credit-card companies use ML/AI models to check for fraud in real time, retailers use them to entice customers with additional purchases, and linguists use them to translate speech. Airlines are experimenting with them to check in passengers using facial recognition, and researchers are using them to analyze the human genome for cancer indicators. These are just a few examples of how we are using ML and AI to improve business processes and make the world a better place to live.

One of the primary challenges facing software developers today is becoming knowledgeable enough about ML and AI to incorporate them into the applications that they write. Case in point: Wintellect was recently asked by a customer to build an application that examines millions of scanned documents and identifies the ones that contain redactions. Ten years ago, this would have been exceedingly difficult. Today, thanks to advances in AI, it’s a reasonable ask – assuming you have the knowledge and skills to make it happen.

AI expedites the image identification process

Examining document scans or photographs and asking questions such as “does this image contain redacted text?” is an image-classification task, and image classification is one area in which AI excels. Specifically, a type of neural network known as a convolutional neural network (CNN) has proven, in some cases, to be more adept than humans at classifying images. However, building a CNN requires deep technical expertise, and training one from scratch requires vast amounts of computing power that are beyond the reach of the average developer.

Enter the cloud. Major cloud providers, including Microsoft, Amazon, and Google, make ML and AI available as a service by exposing pre-trained models through REST APIs. With little or no training in ML and AI, you can use these APIs to analyze text for sentiment, build chatbots, translate speech into other languages, identify objects and faces in photos, and quantify emotion in those faces. They make it possible to build sophisticated image-classification models backed by CNNs, too.

Furthermore, Microsoft’s Custom Vision Service, which belongs to a family of services and APIs known as Azure Cognitive Services, enables developers who have little or no formal training in machine learning and artificial intelligence to build image-classification models and incorporate them into their software.

How to use AI to differentiate between images of dogs and cats

Suppose you want to use software to determine whether a photo contains a dog or a cat. First, you assemble one set of training photos containing dogs and another containing cats. More images are better, but because the Custom Vision Service builds on models that have already been trained on large image collections (a technique known as transfer learning), you can frequently achieve acceptable accuracy with as few as 50 to 100 photos per class. Then you navigate to the Custom Vision Service portal in your browser, create a new project, upload the training photos, and assign each group of photos a label such as “dog” or “cat.” Clicking a button trains the model in the cloud. Training usually takes a few minutes or less.
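Before uploading, it can help to confirm that each class has enough photos. The following is a minimal Python sketch, not part of the Custom Vision Service itself. It assumes a hypothetical local layout with one subfolder per label (for example `training/dog` and `training/cat`), treats the 50-photo figure above as a rule-of-thumb minimum, and uses an assumed list of image file extensions.

```python
from pathlib import Path

# Assumed set of image extensions; adjust to match your own photos.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp", ".gif"}


def count_images_per_label(root):
    """Return {label: image_count}, treating each subfolder of root as a label."""
    counts = {}
    for folder in sorted(Path(root).iterdir()):
        if folder.is_dir():
            counts[folder.name] = sum(
                1
                for f in folder.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_EXTENSIONS
            )
    return counts


def underfilled_labels(counts, minimum=50):
    """Return the labels that have fewer images than the rule-of-thumb minimum."""
    return [label for label, n in counts.items() if n < minimum]
```

Calling `underfilled_labels(count_images_per_label("training"))` would list any label that still needs more photos before you upload and train.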

Once you train the model, you use the portal to “publish” the model and expose a REST endpoint that is called with an image or image URL. The data returned in the call indicates the probability that the picture contains a dog or a cat. If you properly train the model, the results can be astonishingly accurate. If you’re writing the app for a smartphone or other mobile device and need it to work even when an internet connection isn’t available, the Custom Vision Service includes options for downloading trained models and embedding them in your apps.

The beauty of REST calls is that they travel over HTTPS and work with virtually any programming language. For certain languages such as C#, Microsoft makes free SDKs available for simplifying calls to a trained Custom Vision Service model.
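To illustrate the "any language" point, here is a rough Python sketch that builds the raw HTTPS request using only the standard library. The URL path and the `Prediction-Key` header reflect my understanding of the v3.0 prediction API and should be treated as assumptions; the portal displays the exact prediction URL and key once a model is published, and those are the values to trust. The function names are hypothetical.

```python
import json
import urllib.request


def build_classify_request(endpoint, project_id, published_name, key, image_url):
    """Build (but do not send) a POST request that classifies an image by URL."""
    # Assumed URL shape for the v3.0 prediction API; copy the real one from the portal.
    url = (
        f"{endpoint.rstrip('/')}/customvision/v3.0/Prediction/"
        f"{project_id}/classify/iterations/{published_name}/url"
    )
    body = json.dumps({"Url": image_url}).encode("utf-8")
    headers = {"Prediction-Key": key, "Content-Type": "application/json"}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def classify(request):
    """Send the request and return the decoded JSON response as a dict."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Keeping request construction separate from sending makes it easy to inspect the URL and headers before any network traffic occurs.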

Here’s the complete C# code for invoking the Custom Vision Service to determine whether an image contains a dog or a cat:

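    // Requires the Microsoft.Azure.CognitiveServices.Vision.CustomVision.Prediction NuGet package
    using System.Linq;
    using Microsoft.Azure.CognitiveServices.Vision.CustomVision.Prediction;
    using Microsoft.Azure.CognitiveServices.Vision.CustomVision.Prediction.Models;

    CustomVisionPredictionClient client = new CustomVisionPredictionClient()
    {
        ApiKey = _key,
        Endpoint = _endpoint
    };

    // Capture the prediction result so it can be inspected below
    var result = await client.ClassifyImageUrlAsync(_id, _name, new ImageUrl(_imageUrl));

    // Probability for each tag, or 0.0 if the model returned no such tag
    var isDog = result.Predictions.FirstOrDefault(x => x.TagName.ToLowerInvariant() == "dog")?.Probability ?? 0.0;
    var isCat = result.Predictions.FirstOrDefault(x => x.TagName.ToLowerInvariant() == "cat")?.Probability ?? 0.0;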

In this example, _key is an authentication key obtained from the Custom Vision Service, _endpoint is the URL of the REST endpoint (also obtained from the Custom Vision Service), _id is the ID of the Custom Vision project, _name is the name under which the model was published, and _imageUrl is the URL of the image to be analyzed. At the end, the variable named isDog holds a floating-point value indicating the probability that the image contains a dog, while isCat holds a floating-point value with the probability that the image contains a cat. Couldn’t be much simpler than that!
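If you call the REST endpoint directly rather than through the SDK, the same probabilities arrive as JSON. The Python sketch below shows one way to read them. It assumes the response contains a `predictions` list whose items carry `tagName` and `probability` fields; the sample payload is made up for illustration, and the function names and the 0.5 threshold are hypothetical choices rather than anything the service prescribes.

```python
def tag_probabilities(response):
    """Map each lowercased tag name to its probability."""
    return {
        p["tagName"].lower(): p["probability"]
        for p in response.get("predictions", [])
    }


def most_likely(response, threshold=0.5):
    """Return the highest-probability tag, or None if nothing clears the threshold."""
    probs = tag_probabilities(response)
    if not probs:
        return None
    label = max(probs, key=probs.get)
    return label if probs[label] >= threshold else None


# Made-up sample response, for illustration only.
sample = {
    "predictions": [
        {"tagName": "dog", "probability": 0.97},
        {"tagName": "cat", "probability": 0.03},
    ]
}

is_dog = tag_probabilities(sample).get("dog", 0.0)
is_cat = tag_probabilities(sample).get("cat", 0.0)
```

Returning `None` below the threshold leaves room for a "neither" answer, which is useful when a photo contains no dog or cat at all.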

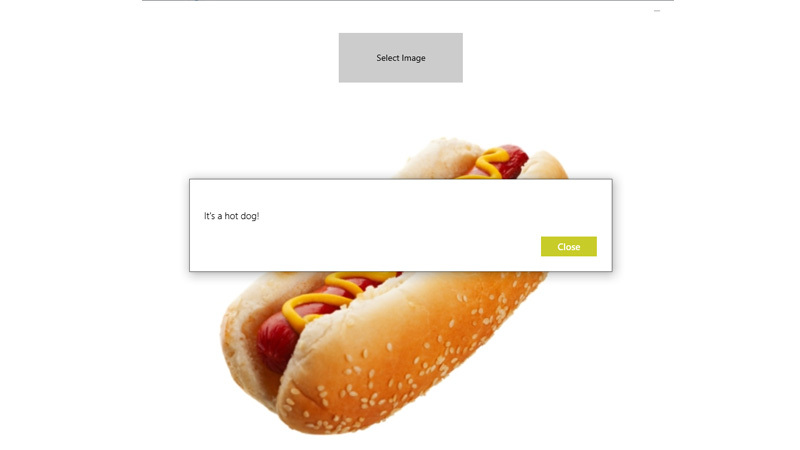

I recently trained a Custom Vision Service model to determine whether an image contains a hot dog, inspired by the famous NotHotDog app on the TV show Silicon Valley. Then I built a Windows app that lets you upload photos, and that tells you whether each photo contains a hot dog:

The source code is available on GitHub if you’d like to see how it works. Note that you will have to train your model and plug the authentication key and endpoint URL into the code for the app to work.

The intelligence APIs offered in Azure Cognitive Services enable software developers to infuse AI into their apps without becoming AI experts themselves, and they exemplify the continued evolution of cloud computing.

Jeff Prosise is a co-founder of Wintellect.
